As someone who tests AI tools for a living, I’ve seen models improve quickly, sometimes overnight. ChatGPT-4.5 and Gemini 2.5 Pro are two of the best in the game right now, and both have received significant updates in recent months.

On paper, both are formidable multimodal assistants that can help with everything from writing and coding to image analysis and productivity. But which one performs better when it counts?

To find out, I gave both models the same five prompts, deliberately chosen to represent tasks that many people use AI for every day: summarizing, creative writing, explaining hard concepts, interpreting images, and planning.

I didn’t expect perfection; I expected differences. What I didn’t foresee was how specific challenges would reveal strengths (and quirks) that gave one model an unexpected advantage.

Here’s how ChatGPT-4.5 and Gemini 2.5 Pro compared, and why you might choose one over the other based on how you work.

1. Summarization & compression

Prompt: “Summarize this New York Times article in under 100 words and give me one key takeaway I might not catch on my own.” (I provided the article “5 Science-Backed Longevity ‘Hacks’ That Don’t Cost a Fortune.”)

ChatGPT-4.5 produced a succinct summary of under 100 words, as required. It offered a clear, perceptive takeaway about the impact of social relationships on lifespan, which met the request for something the reader might overlook.

Gemini 2.5 Pro supplied a general overview but failed to meet the main requirements: it didn’t summarize the actual article or provide a distinctive takeaway.

ChatGPT wins for following the prompt directly, providing a concise summary and a thoughtful key takeaway. Gemini’s response this time was disappointing.

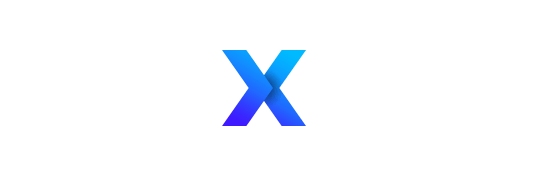

2. Creative writing & tone adaptation

Prompt: “Write a product description for a retro-style smartwatch that sounds like it was written by Taylor Swift.”

ChatGPT-4.5 struck a balance between retro and tech, describing the watch’s appearance while promoting its smart functions. The copy was entertaining and lyrical, reminiscent of a Taylor Swift song.

Gemini 2.5 Pro used powerful Swiftian imagery that felt like her Folklore/Evermore era. The copy evoked nostalgia effectively but lacked an obvious call to action.

ChatGPT wins for nailing Taylor’s voice and producing engaging product copy. Gemini’s copy was prettier but less practical; it felt more like a poem than proper sales copy.

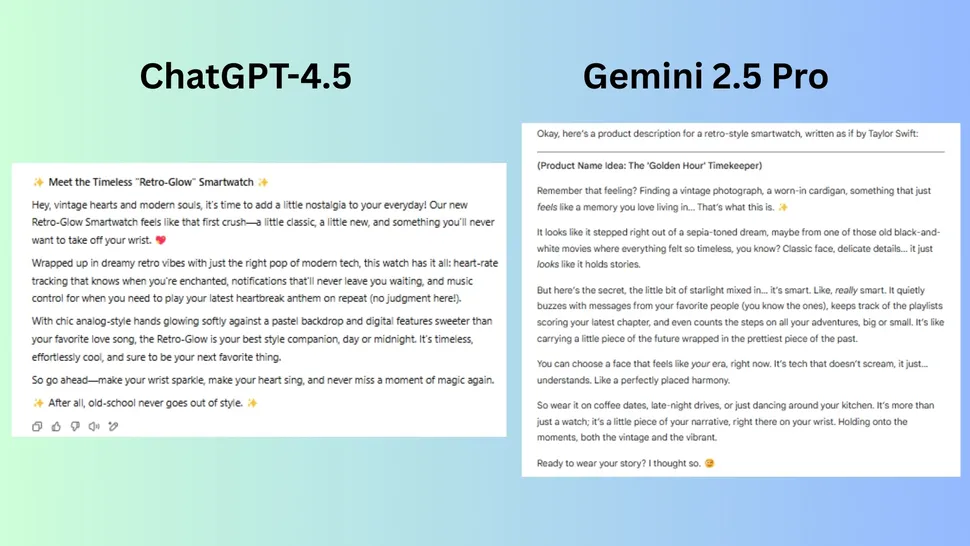

3. Analytical reasoning

Prompt: “Explain quantum computing to a 12-year-old using analogies, but also include one paragraph for adults who want the technical details.”

ChatGPT-4.5 provided a straightforward, punchy analogy for children. It was brief yet accurate, suited to a child’s attention span. The technical section was streamlined, focusing on concepts rather than math or algorithms.

Gemini 2.5 Pro used a kid-friendly comparison as well as metaphors to make superposition and entanglement clearer. It drew a clear contrast between classical and quantum computers.

ChatGPT wins for striking a balance of clarity that both children and adults can follow. Gemini’s response is solid, but it overexplains, which may be too much for a child.

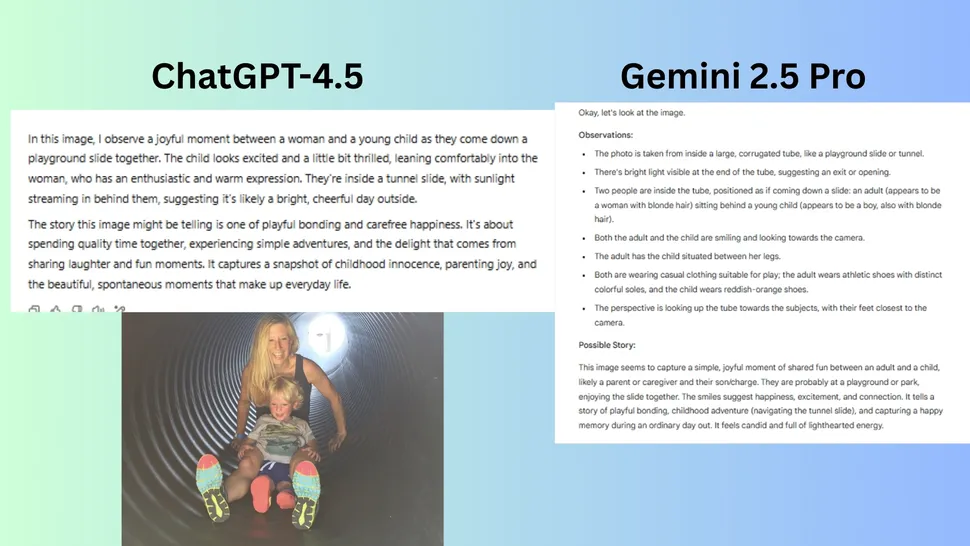

4. Image interpretation

Prompt: “Based on this image, tell me what you observe and what story it might be telling.” I uploaded a photo of my son and me going down a tube together at a farm playground.

ChatGPT-4.5 leaned into emotion, emphasizing the warmth and joy of the moment. Framing the experience as a “snapshot of childhood innocence” and “parenting joy” made for a richer, more descriptive narrative.

Gemini 2.5 Pro closely inspected specific details such as the corrugated tube, shoes, and clothing. It accurately described the atmosphere and the interaction between my child and me. The storytelling element was there too: it described the moment as “joyful bonding.”

ChatGPT wins because it wove the details into a more captivating and emotionally resonant story.

5. Task organization

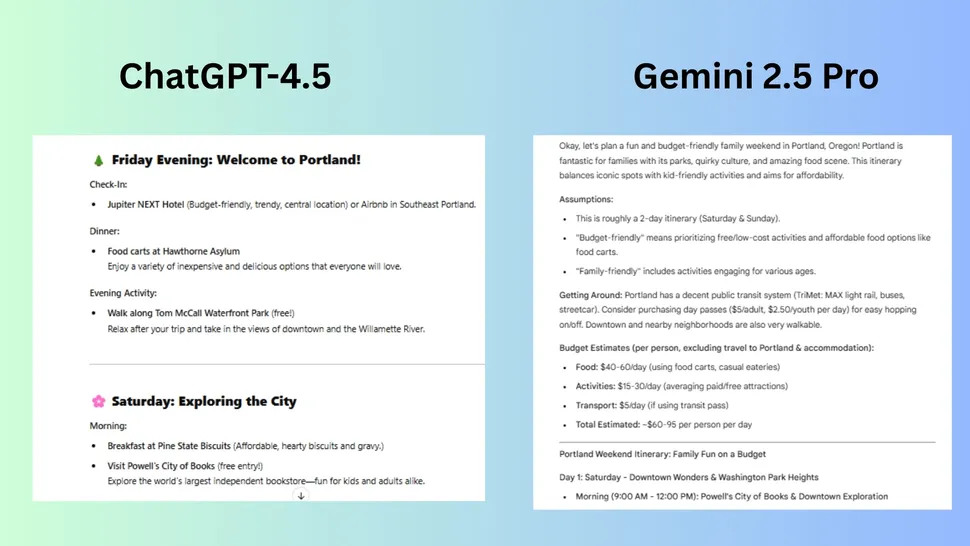

Prompt: “Help me plan a weekend trip to Portland, Oregon, including a budget itinerary, places to eat and family-friendly activities.”

ChatGPT-4.5 developed a straightforward, cost-effective plan with a family-friendly focus. It provided a variety of kid-friendly locations as well as an estimated budget breakdown.

Gemini 2.5 Pro provided a highly detailed itinerary with local knowledge and multiple options. The budget was transparent, with a clear breakdown of cost estimates.

Gemini wins for providing a more practical plan that includes everything from transportation directions to emergency plans. It covered meticulous trip details that a user might have overlooked.

Final verdict: ChatGPT wins

After comparing Gemini and ChatGPT on five different prompts, ranging from summarizing articles and writing marketing copy to explaining complex topics and planning a trip, it’s evident that the two chatbots excel in different areas.

ChatGPT excels in creativity, storytelling, and concise communication, making it well suited to engaging narratives and brief, clear explanations.

Gemini excels at detailed logistics, technical precision, and systematic planning, as well as in-depth discussion of specific topics.

While the “better” chatbot clearly depends on the task at hand, both offer winning features. I recommend using them together: they cover a wide spectrum of needs, from casual creativity to meticulous problem-solving, and in many cases a combination of the two will meet your needs better than either one alone.